In a data-driven era, improving data quality is no longer optional; it’s critical. Businesses rely on accurate, complete data for decision-making, operational efficiency, and customer satisfaction. Poor-quality data, by contrast, leads to flawed decisions, inefficiencies, and compliance risks.

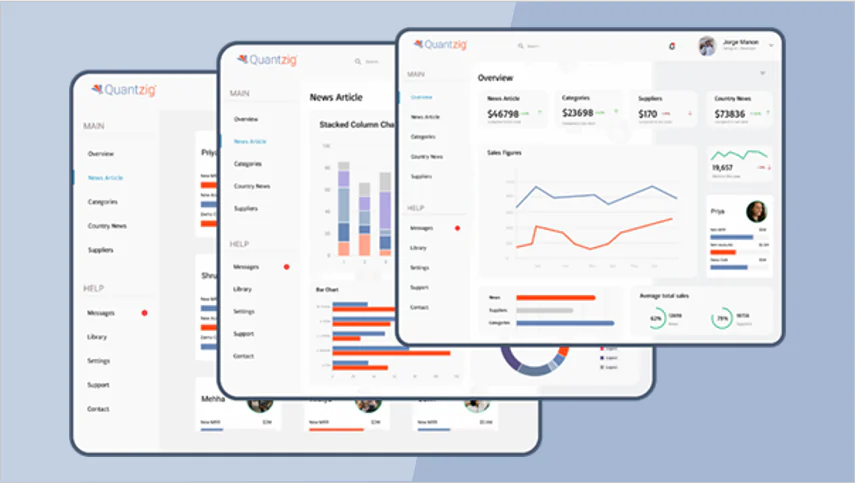

This case study explores the significance of data quality management, strategies for improvement, and how Quantzig’s advanced solutions have enabled enterprises to unlock the true potential of their data assets.

Key Wins with Data Quality Management

- Regularly analyze datasets using profiling and validation tools to identify and correct inconsistencies, ensuring data accuracy and completeness.

- Standardize formats, naming conventions, and classifications to enable seamless data integration across systems and departments.

- Establish robust data governance policies, monitor key quality metrics, and use dashboards to track and enhance data performance.

- Leverage AI-powered tools for automated data cleaning, deduplication, and enrichment to improve overall data quality and usability.

- Quantzig’s tailored data quality management solutions help businesses streamline processes, enhance data integrity, and unlock actionable insights for better decision-making.

Why Data Quality Matters

High-quality data is the foundation for successful business operations and strategy. Here’s why improving data quality is vital:

- Informed Decision-Making: Accurate and reliable data supports decisions that drive business growth.

- Operational Efficiency: Quality data eliminates redundancies, leading to optimized processes.

- Customer Experience: Tailored customer interactions rely on data enrichment and data profiling for personalization.

- Regulatory Compliance: With regulations like GDPR and CCPA, data completeness and data standardization are essential to mitigate compliance risks.

- Enhanced Analytics: Reliable data is critical for generating actionable insights from advanced analytics.

Challenges in Data Quality

Data drawn from multiple sources, such as CRM systems, unstructured data stores, and external databases, often suffers from problems like:

| Challenge | Impact on Data |
|---|---|
| Inconsistent Formats | Misalignment between datasets affects usability. |
| Data Redundancy | Duplicate entries inflate storage costs and skew analytics. |
| Incomplete Data | Missing values hinder effective decision-making. |
| Poor Data Governance | Lack of oversight results in non-compliance and inefficiency. |
| Data Integrity Issues | Errors or inaccuracies reduce trust in data-driven processes. |

Quantzig’s Approach to Data Quality Management

Quantzig partnered with a global pharmaceutical company to address pressing data quality challenges. Below is a detailed overview of the process:

Challenges Addressed

- Semantic Misalignment: Inconsistent terminologies, drug names, and metrics across databases.

- Classification Issues: Misaligned customer segments and distribution channels.

- Data Completeness Problems: Missing or incomplete records, outliers, and discrepancies.

- Format Inconsistencies: Non-standardized HCP IDs, contact details, and geographic identifiers.

Quantzig’s Solution

Quantzig implemented an end-to-end data quality enhancement framework powered by AI-driven automation and advanced data validation techniques:

| Solution Component | Description |
|---|---|
| Automated Cleaning | Used AI to detect and resolve inconsistencies. |
| Data Deduplication | Eliminated redundant records to enhance accuracy. |
| Semantic Mapping | Mapped inconsistencies using NLP algorithms for improved data integrity. |
| Workflow Automation | Automated unresolved issue reporting to stakeholders for manual intervention. |
| Role-Based Access | Ensured secure data handling and accountability. |

Implementation Highlights

- Automated Issue Classification: Leveraged data profiling and semantic mapping for categorizing data anomalies.

- Semantic Mapping: AI-powered modules resolved terminology discrepancies.

- Machine Learning Back-End: Achieved 95% accuracy in issue resolution, drastically reducing manual effort.

- Data Validation Workflows: Automated processes identified unresolved issues and alerted stakeholders.
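
The semantic mapping and escalation steps above can be illustrated with a minimal sketch. It is not Quantzig's implementation: the case study used NLP models for similarity scoring, whereas this example swaps in character-level similarity from Python's standard `difflib` module so it runs without extra dependencies. The vocabulary `CANONICAL_TERMS`, the 0.8 threshold, and the function names are all assumptions made for illustration.

```python
from difflib import SequenceMatcher

# Hypothetical canonical vocabulary; a real drug dictionary would be far larger.
CANONICAL_TERMS = ["Atorvastatin", "Metformin", "Amoxicillin"]


def similarity(a: str, b: str) -> float:
    """Character-level similarity in [0, 1], ignoring case and outer whitespace."""
    return SequenceMatcher(None, a.strip().lower(), b.strip().lower()).ratio()


def map_term(raw: str, threshold: float = 0.8):
    """Return (best_match, score); best_match is None when the score is too low."""
    score, best = max((similarity(raw, term), term) for term in CANONICAL_TERMS)
    return (best if score >= threshold else None, score)


def triage(raw_terms):
    """Split terms into auto-resolved mappings and a manual-review queue."""
    resolved, needs_review = {}, []
    for raw in raw_terms:
        match, _score = map_term(raw)
        if match is None:
            needs_review.append(raw)  # escalate to a stakeholder
        else:
            resolved[raw] = match
    return resolved, needs_review
```

Terms that clear the threshold are mapped automatically, and everything else lands in `needs_review`, mirroring the workflow in which unresolved issues are reported to stakeholders for manual intervention.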

Technology Stack

| Technology | Purpose |
|---|---|
| React | Built an intuitive, user-friendly interface. |
| Flask | API development for seamless system integration. |
| Amazon S3/Azure Blob | Data storage and management. |
| Hugging Face Models | NLP-based semantic mapping and similarity scoring. |

Business Impact

The implementation delivered measurable outcomes for the client:

- Improved Data Quality: Achieved a 98% data quality standard within five months.

- Cost Savings: Reduced manual intervention, saving $15 million annually.

- Efficiency Gains: Decreased manual data processing by 75%.

A Recent Client Story: Quantzig Transforming Data for Decision-Making

Client: A global pharmaceutical leader

Challenge: Poor-quality HCP data hindered decision-making and operational efficiency.

Solution

- Implemented a data quality strategy to identify and resolve inconsistencies.

- Introduced an AI-driven data enrichment tool for standardization and cleaning.

- Developed a real-time dashboard to monitor data quality metrics.

Outcome

| Metric | Before Quantzig | After Quantzig |
|---|---|---|
| Data Usability | 50% | 98% |
| Manual Intervention | High | Reduced by 75% |
| Cost of Data Handling | $20 million/year | $5 million/year |

Best Practices for Data Quality Management

To maintain high data standards and ensure business success, organizations should incorporate a comprehensive approach to data quality management. Here’s a detailed breakdown of best practices:

Best Practices

- Data Profiling and Validation

- Data Standardization

- Data Cleansing and Deduplication

- Data Governance

- Implementing Advanced Data Quality Tools

- Monitoring Data Quality Metrics

Data Profiling and Validation

Why it’s Important:

Data profiling and validation are crucial for uncovering errors, identifying outliers, and understanding the structure and quality of your datasets. These practices ensure your data is accurate, complete, and consistent before analysis or decision-making.

Best Practices:

- Conduct Regular Profiling: Use profiling tools to analyze datasets for anomalies, including missing values, duplicate entries, or unexpected formats.

- Validate Across Sources: Compare and validate data from multiple sources to ensure alignment and resolve discrepancies.

- Automate Validation Processes: Implement automated tools to streamline error detection and ensure datasets meet predefined quality thresholds.

Tools and Techniques:

- Utilize data profiling tools like Talend or Informatica to identify inconsistencies.

- Set up automated scripts for validating large datasets.
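
As a rough illustration of what an automated profiling and validation script might look like, the sketch below counts missing values, exact duplicate rows, and format violations, then checks the result against a threshold. It uses only the Python standard library. The field names, the regular expressions in `FORMAT_RULES`, and the 5% default threshold are assumptions, not values taken from any particular tool.

```python
import re
from collections import Counter

# Hypothetical format rules per field; adjust to your own schema.
FORMAT_RULES = {
    "email": re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$"),
    "zip": re.compile(r"^\d{5}$"),
}


def profile(records, required_fields):
    """Count missing values, exact duplicate rows, and format violations."""
    missing, bad_format, seen = Counter(), Counter(), Counter()
    for row in records:
        # Key order does not matter when deciding whether two rows are identical.
        seen[tuple(sorted(row.items()))] += 1
        for field in required_fields:
            value = row.get(field)
            if value is None or str(value).strip() == "":
                missing[field] += 1
            elif field in FORMAT_RULES and not FORMAT_RULES[field].match(str(value)):
                bad_format[field] += 1
    duplicates = sum(count - 1 for count in seen.values())
    return {
        "rows": len(records),
        "missing": dict(missing),
        "bad_format": dict(bad_format),
        "duplicates": duplicates,
    }


def passes(report, max_missing_rate=0.05):
    """Check the profile against a predefined missing-value threshold."""
    rows = report["rows"] or 1
    return all(n / rows <= max_missing_rate for n in report["missing"].values())
```

Running `profile` on a schedule and failing the pipeline when `passes` returns `False` is one simple way to enforce a quality threshold before data reaches analysis.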

Data Standardization

Why it’s Important:

Standardization ensures uniformity in data formats, naming conventions, and classifications, making datasets interoperable and easier to integrate.

Best Practices:

- Define Standards: Establish a consistent framework for naming conventions, date formats, and categorical classifications across all departments.

- Transform Data as Needed: Use data transformation techniques to reconcile disparate formats from multiple systems.

- Enable Collaboration: Involve cross-functional teams to agree on standards and avoid siloed decision-making.

Example:

- Standardizing date formats (e.g., MM/DD/YYYY vs. DD-MM-YYYY) across systems ensures consistent reporting.
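
The date example above can be sketched in a few lines of Python. The source system names (`crm`, `erp`) and the choice of ISO 8601 (`YYYY-MM-DD`) as the target format are assumptions for illustration; the point is that each source declares its format explicitly rather than having the parser guess.

```python
from datetime import datetime

# Hypothetical mapping from source system to the date format it emits.
SOURCE_FORMATS = {
    "crm": "%m/%d/%Y",  # MM/DD/YYYY
    "erp": "%d-%m-%Y",  # DD-MM-YYYY
}


def to_iso_date(value: str, source: str) -> str:
    """Parse a date using the source system's known format and return YYYY-MM-DD."""
    parsed = datetime.strptime(value.strip(), SOURCE_FORMATS[source])
    return parsed.date().isoformat()
```

With these rules, `to_iso_date("03/04/2024", "crm")` returns `"2024-03-04"`, while `to_iso_date("03-04-2024", "erp")` returns `"2024-04-03"`. Guessing the format from the string alone would be unsafe here, because the same digits mean March 4 in one system and April 3 in the other.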

Data Cleansing and Deduplication

Why it’s Important:

Poor-quality data with redundancies or inaccuracies can skew analysis, waste storage resources, and reduce trust in insights.

Best Practices:

- Eliminate Redundancies: Perform data deduplication to remove duplicate entries that inflate storage costs and mislead analytics.

- Cleanse Continuously: Implement advanced data cleaning practices to address inaccuracies, such as invalid email addresses or phone numbers.

- Incorporate Validation Loops: Establish checks during cleansing to confirm that the data retains its integrity post-cleaning.

Techniques:

- Use machine learning algorithms for detecting duplicates and automating cleanup processes.

- Cross-reference datasets to ensure completeness.
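
The cleansing and deduplication practices above can be sketched as follows. Note the substitution: the techniques listed mention machine learning for duplicate detection, but this example uses simple rule-based normalization so it stays short and dependency-free. The `email` and `phone` field names and the email pattern are assumptions.

```python
import re

# Deliberately loose pattern: something@something.something, no spaces.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def normalize_email(email: str) -> str:
    return email.strip().lower()


def normalize_phone(phone: str) -> str:
    """Keep digits only so differently punctuated numbers compare equal."""
    return re.sub(r"\D", "", phone)


def cleanse_and_dedupe(records):
    """Reject rows with invalid emails, then keep the first row per (email, phone)."""
    kept, rejected, seen = [], [], set()
    for row in records:
        email = normalize_email(row.get("email", ""))
        if not EMAIL_PATTERN.match(email):
            rejected.append(row)
            continue
        key = (email, normalize_phone(row.get("phone", "")))
        if key in seen:
            continue  # duplicate of a row already kept
        seen.add(key)
        kept.append({**row, "email": email, "phone": key[1]})
    # Validation loop: cleaned output must contain no duplicate keys.
    assert len({(r["email"], r["phone"]) for r in kept}) == len(kept)
    duplicates = len(records) - len(kept) - len(rejected)
    return kept, rejected, duplicates
```

Returning the rejected rows and the duplicate count, rather than silently dropping them, makes it possible to audit what the cleansing step removed.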

Data Governance

Why it’s Important:

A robust governance framework ensures data is handled securely, consistently, and in compliance with regulations. It also promotes accountability and accessibility.

Best Practices:

- Establish Clear Policies: Create policies defining how data should be collected, stored, and used.

- Implement Role-Based Access Controls (RBAC): Restrict data access to authorized users only to enhance security and accountability.

- Monitor Compliance: Use tools to track adherence to governance policies and regulatory requirements like GDPR or CCPA.

Key Components:

- Data Ownership: Assign clear responsibilities for data stewardship.

- Audit Trails: Maintain logs to track data usage and access.
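
To make role-based access control and audit trails concrete, here is a minimal sketch in which every access attempt is checked against a role's permissions and logged, whether or not it succeeds. The roles, permissions, and in-memory `audit_log` are hypothetical; a production system would rely on an identity provider and an append-only log store.

```python
from datetime import datetime, timezone

# Hypothetical roles and permissions; a real policy comes from the data owners.
ROLE_PERMISSIONS = {
    "data_steward": {"read", "write"},
    "analyst": {"read"},
}

audit_log = []  # Stand-in for an append-only audit store.


def access(user: str, role: str, action: str, dataset: str) -> bool:
    """Check the role's permissions and record the attempt in the audit trail."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "action": action,
        "dataset": dataset,
        "allowed": allowed,
    })
    return allowed
```

Logging denied attempts as well as granted ones is a deliberate choice: compliance reviews usually need to see who tried to reach restricted data, not only who succeeded.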

Implementing Advanced Data Quality Tools

Why it’s Important:

Manual data management is time-intensive and error-prone. Advanced tools automate repetitive tasks, ensuring scalability and accuracy in data quality initiatives.

Best Practices:

- Leverage AI-Powered Solutions: Use tools with AI capabilities for tasks like data cleaning, data enrichment, and anomaly detection.

- Integrate Across Systems: Ensure tools can seamlessly integrate with existing CRM, ERP, or analytics platforms.

- Focus on Real-Time Quality Checks: Deploy tools that can perform validation and profiling in real time to avoid delays.

Popular Tools:

- Informatica Data Quality

- Talend Data Preparation

- Microsoft Power BI

Monitoring Data Quality Metrics

Why it’s Important:

Defining and tracking data quality metrics like accuracy, consistency, and completeness ensures continuous improvement.

Best Practices:

- Set Key Performance Indicators (KPIs): Use metrics such as:

    - Accuracy: The percentage of error-free data.

    - Completeness: The extent to which all required fields are filled.

    - Timeliness: How current the data is.

- Establish Benchmarks: Create baseline thresholds for acceptable data quality levels and measure against them.

- Create Dashboards: Use dashboards to visualize performance metrics and track improvements over time.

Tools for Monitoring:

- Leverage analytics platforms like Tableau or Power BI for tracking and reporting data quality KPIs.
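
The three KPIs and the benchmark comparison described above can be computed with a short script before the numbers are fed to a dashboard. This is a sketch under assumptions: the record layout (an `updated` date per record), the one-year freshness window, and the caller-supplied validity check are illustrative choices, not standard definitions.

```python
from datetime import date


def completeness(records, required_fields):
    """Share of required fields that are filled, across all records."""
    total = len(records) * len(required_fields)
    if total == 0:
        return 1.0
    filled = sum(
        1 for row in records for field in required_fields
        if row.get(field) not in (None, "")
    )
    return filled / total


def accuracy(records, is_valid):
    """Share of records that pass a caller-supplied validity check."""
    if not records:
        return 1.0
    return sum(1 for row in records if is_valid(row)) / len(records)


def timeliness(records, today, max_age_days=365):
    """Share of records updated within max_age_days of `today`."""
    if not records:
        return 1.0
    fresh = sum(1 for row in records if (today - row["updated"]).days <= max_age_days)
    return fresh / len(records)


def below_benchmark(metrics, benchmarks):
    """Return the names of metrics that fall below their baseline threshold."""
    return sorted(name for name, value in metrics.items() if value < benchmarks[name])
```

The output of `below_benchmark` is a natural input for alerts, while the raw metric values can be stored per run to plot trends over time.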

Implementation Checklist for Data Quality Management

- Data Profiling and Validation: Analyze datasets, identify inconsistencies, and validate across sources.

- Data Standardization: Define uniform standards and use transformation techniques to align data.

- Data Cleansing and Deduplication: Automate cleaning processes and remove duplicate records.

- Data Governance: Implement RBAC and establish compliance monitoring frameworks.

- Advanced Tools Integration: Deploy AI-powered tools for automation and real-time monitoring.

- Metric Tracking: Define KPIs and track performance using interactive dashboards.

By adopting these detailed best practices, businesses can significantly enhance data accuracy, reliability, and usability, fostering improved decision-making and operational efficiency.

Quantzig’s Key Capabilities in Data Quality Enhancement

Quantzig’s solutions cater to a range of industries, offering tailored services for specific data challenges:

| Capability | Benefit |
|---|---|
| Data Validation | Ensures data accuracy through automated checks and balances. |
| Data Cleansing | Eliminates errors and inconsistencies for reliable insights. |
| Data Deduplication | Reduces redundancies, ensuring streamlined databases. |
| Data Standardization | Aligns data formats to enhance interoperability and usability. |
| Data Enrichment | Fills missing information to provide a comprehensive data landscape. |
| Data Governance Framework | Enables compliance and fosters better data management practices. |

Looking Ahead: The Road to Data Quality Excellence

Quantzig’s solutions exemplify how data quality enhancement translates into tangible business outcomes. By prioritizing data accuracy improvement, operational efficiency, and compliance, organizations can unlock unparalleled value from their data assets.

Conclusion

Investing in data quality management isn’t just a technical necessity—it’s a strategic imperative. High-quality data enables better decision-making, boosts customer satisfaction, and ensures operational excellence. Quantzig’s expertise in data cleaning, data validation, and data transformation has empowered organizations to realize the full potential of their data.
